Cognitive EW

The leap towards cognitive technology is one of the most exciting trends in EW today.

Programs such as DARPA’s Adaptive Radar Countermeasures (ARC) and Behavioural Learning for Adaptive Electronic Warfare (BLADE) are among the first examples of this new generation of Cognitive EW technology.

They promise to keep EW one step ahead of threat systems that operate across wider bandwidths and feature much better RF agility and adaptability than previous generations, enabled by the massive use of digital waveform generation and processing.

However, as the EW community forges ahead with cognitive EW technology development and explores new cognitive system concepts, it is clear that we are only beginning to scratch the surface of what cognitive technology will mean, not only to EW but also to all areas of defence electronics and to all operations within the EM Spectrum (EMS).

The idea is bio-inspired or, better, human-inspired: it seeks to emulate a cognitive and autonomous approach to the environment in terms of observing, orienting, deciding, acting and applying experience.

Cognition is defined as the conscious intellectual activity performed by human beings: changing preferences, applying knowledge, processing information, learning, applying experience, imagining, reasoning and (finally) thinking.

Autonomous means that a machine (and not a human) “somehow” performs those functions in tiny fractions of a second.

Traditional approaches are already able to emulate activities such as changing preferences, applying knowledge and processing information, while the new frontier is to go beyond this, giving the Spectrum Dependent System some capability to learn and then apply experience.

As is well known, the mathematical-engineering discipline that aims to create an intelligent agent, that is, a machine emulating human behaviour, is commonly identified as Artificial Intelligence. Nevertheless, this term covers many specific disciplines, each typically dedicated to one specific aspect of the human behaviour to be emulated.

It is clear that the application of A.I. to EW is still at the beginning.

However, some potential applications can already be envisaged that take advantage of the increased computational capability offered by FPGAs, CPUs, GPUs, parallel computing and cloud architectures.

• Cognitive Signal Classification

On July 31st, 2017, the Machine Learning 4 SETI Code Challenge (ML4SETI), created by the SETI Institute (Search for Extra-terrestrial Intelligence) and IBM, was completed.

Nearly 75 participants, with a wide range of backgrounds, from academia to industry, worked in teams on the project.

The project challenged participants to build a machine-learning model to classify different signal types observed in radio telescope data in the search for extra-terrestrial intelligence.

Several classes of signal were simulated (and, thus, labelled), with which scientists trained their models.

The SETI Institute measured the performance of these models with test sets in order to determine a winner of the code challenge.

The models from the top teams, using Deep Learning techniques, attained nearly 95% accuracy in signal classification, including some signals with a very low signal-to-noise ratio that are theoretically unclassifiable with traditional imperative rules.
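As a toy illustration of the train-then-test workflow described above, the sketch below simulates two labelled signal classes in noise, trains a classifier on them and measures its accuracy on a separate test set. It is not a reconstruction of the ML4SETI models: a simple nearest-centroid classifier on two hand-made features stands in for Deep Learning, and the signal classes, noise level, features and all function names are assumptions made for illustration.

```python
import math
import random

def make_signal(kind, rng, n=128, noise_std=0.3):
    """Simulate one labelled signal: a continuous ('cw') or gated ('pulsed') tone in Gaussian noise."""
    freq = rng.uniform(0.1, 0.4)            # normalised frequency, cycles per sample
    phase = rng.uniform(0.0, 2.0 * math.pi)
    burst = n // 4                          # a pulsed tone is present for a quarter of the window
    start = rng.randrange(0, n - burst)
    samples = []
    for i in range(n):
        tone_on = kind == "cw" or start <= i < start + burst
        tone = math.cos(2.0 * math.pi * freq * i + phase) if tone_on else 0.0
        samples.append(tone + rng.gauss(0.0, noise_std))
    return samples

def features(samples, n_blocks=8):
    """Two hand-made features: relative spread of block energies and fraction of 'busy' blocks."""
    size = len(samples) // n_blocks
    energies = [sum(x * x for x in samples[b * size:(b + 1) * size]) for b in range(n_blocks)]
    avg = sum(energies) / n_blocks
    spread = math.sqrt(sum((e - avg) ** 2 for e in energies) / n_blocks) / avg
    busy = sum(e > 0.5 * max(energies) for e in energies) / n_blocks
    return (spread, busy)

def train_centroids(examples):
    """Training step: average the feature vectors of each labelled class."""
    grouped = {}
    for label, feat in examples:
        grouped.setdefault(label, []).append(feat)
    return {label: tuple(sum(col) / len(col) for col in zip(*feats))
            for label, feats in grouped.items()}

def classify(centroids, feat):
    """Assign the label of the nearest class centroid."""
    return min(centroids, key=lambda label: math.dist(centroids[label], feat))

def run_experiment(seed=0, n_train=200, n_test=200):
    """Train on simulated labelled signals, then return accuracy on unseen test signals."""
    rng = random.Random(seed)

    def dataset(count):
        data = []
        for _ in range(count):
            kind = rng.choice(["cw", "pulsed"])
            data.append((kind, features(make_signal(kind, rng))))
        return data

    centroids = train_centroids(dataset(n_train))
    test_set = dataset(n_test)
    hits = sum(classify(centroids, feat) == label for label, feat in test_set)
    return hits / n_test
```

Calling `run_experiment()` returns the fraction of test signals classified correctly. The two classes here are deliberately easy to separate; the value of learned models lies in cases where no such hand-made features are known.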

There are many analogies between this case and the primary issue of an ESM system, which performs signal detection-discrimination-classification-identification in non-cooperative conditions.

However, many differences are also evident:

◦ the background is quasi-stationary in the universe, while this is not the case in typical electromagnetic scenarios;

◦ the search for intelligent extra-terrestrial electromagnetic activity is based on the persistence of a new signal for a certain time, while ESM systems are often looking for fleeting threats;

◦ the requirements in terms of processing time are quite different, since EW reacts in real time or quasi real time, even if the amount of data to be processed is considerably less than that received from the universe.

In any case, these are not blocking issues, and the application of deep learning to electromagnetic signal classification for EW already has its success stories.

The real issue, from our perspective, is the availability of the data needed to train A.I.-based engines, whatever branch of A.I. we want to apply; this imposes methods and procedures at User level for the enterprise to succeed.

• Cognitive Texture Classification

Texture classification is the problem of classifying images (or image regions) according to their textural cues. Crucial to the success of texture classification are:

(1) the identification of features that differentiate textures in an image and the development of their representation for further classification, and

(2) the construction of classification paradigms that operate on the above representation and discriminate between texture features associated with different texture classes.

Accordingly, the texture classification problem is conventionally divided into the two sub-problems of feature extraction and classification.

Related to (1), many methods have been developed to extract textural features; they can be loosely classified as statistical, model-based and signal-processing methods. Every method has pros and cons, which are often related to the field of application.

Signal processing methods, also known as multichannel filtering methods, are attractive due to their simplicity.

In these methods, a textured input image is decomposed into feature images using a bank of filters, such as wavelet, statistical or neural network-based filters.

As a result, a high-dimensional textural pattern can be represented by a relatively small set of features that need to be extracted using a set of well-selected filters.

The major issue with this approach is the selection of a good set of filters for a given texture classification problem. Nevertheless, these methods have found a natural application in solving advanced fingerprinting problems in dense electromagnetic environments, where unwanted modulations are exploited to map different signals to different textures.
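The multichannel filtering idea can be sketched in a few lines: each filter in the bank produces a feature image, and each feature image is reduced to a single number (here, its mean energy). The three difference filters, the synthetic textures and all names below are assumptions chosen for illustration; they are not the wavelet, statistical or neural filters a real system would use.

```python
def filter_response(image, kernel):
    """'Valid' 2-D correlation of an image with a small kernel (both given as lists of rows)."""
    kh, kw = len(kernel), len(kernel[0])
    rows, cols = len(image) - kh + 1, len(image[0]) - kw + 1
    return [[sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
             for c in range(cols)]
            for r in range(rows)]

# Hypothetical three-filter bank; choosing good filters is the hard part in practice.
FILTER_BANK = {
    "x_difference": [[-1, 1]],
    "y_difference": [[-1], [1]],
    "diagonal_difference": [[-1, 0], [0, 1]],
}

def texture_features(image, bank=FILTER_BANK):
    """Reduce an image to one number per filter: the mean energy of that filter's response."""
    feats = []
    for kernel in bank.values():
        response = filter_response(image, kernel)
        count = len(response) * len(response[0])
        feats.append(sum(v * v for row in response for v in row) / count)
    return feats

def make_texture(rule, size=8):
    """Build a size x size binary test texture from a rule f(row, col) -> 0 or 1."""
    return [[rule(r, c) for c in range(size)] for r in range(size)]

vertical_stripes = make_texture(lambda r, c: c % 2)
horizontal_stripes = make_texture(lambda r, c: r % 2)
checkerboard = make_texture(lambda r, c: (r + c) % 2)
```

With this bank, the 64-pixel textures collapse to three-element feature vectors: `[1.0, 0.0, 1.0]` for vertical stripes, `[0.0, 1.0, 1.0]` for horizontal stripes and `[1.0, 1.0, 0.0]` for the checkerboard, so even a trivial classifier can tell them apart.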

Related to (2), from Bayes classifier to neural networks, there are many possible choices for an appropriate classifier.

Among these, Support Vector Machines (SVM) would appear to be a good candidate because of their ability to generalize in high-dimensional spaces, such as spaces spanned by texture patterns. The appeal of SVMs is based on their strong connection to the underlying “statistical learning theory”: that is, an SVM is an approximate implementation of the Structural Risk Minimization method, and many papers on the subject can be found in the open literature.
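To make the SVM idea concrete, the following is a minimal sketch of a linear SVM trained by subgradient descent on the regularised hinge loss. The regularisation term limits the capacity of the classifier while the hinge term penalises training errors, which is the trade-off that Structural Risk Minimization formalises. The training data, hyperparameters and function names are assumptions for illustration; a production system would more likely rely on an established library and a kernel suited to the feature space.

```python
def train_linear_svm(samples, labels, reg=0.01, lr=0.1, epochs=50):
    """Subgradient descent on reg/2 * |w|^2 + max(0, 1 - y * (w.x + b)), one sample at a time."""
    w = [0.0] * len(samples[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(samples, labels):
            margin = y * (sum(wi * xi for wi, xi in zip(w, x)) + b)
            # The regulariser always shrinks w (capacity control) ...
            w = [wi * (1.0 - lr * reg) for wi in w]
            # ... while the hinge term only acts on points inside the margin.
            if margin < 1.0:
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b

def predict(w, b, x):
    """Return +1 or -1 depending on the side of the separating hyperplane."""
    return 1 if sum(wi * xi for wi, xi in zip(w, x)) + b >= 0.0 else -1

# Hypothetical 2-D feature vectors for two texture classes (+1 and -1).
train_x = [(2.0, 2.0), (-2.0, -2.0), (3.0, 1.0), (-3.0, -1.0),
           (1.0, 3.0), (-1.0, -3.0), (2.5, 2.5), (-2.5, -2.5)]
train_y = [1, -1, 1, -1, 1, -1, 1, -1]

weights, bias = train_linear_svm(train_x, train_y)
```

After training, `predict(weights, bias, x)` labels a new feature vector `x`.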

• Cognitive Event Classification

An emerging requirement in many fields of human sciences (including medicine, politics, business and many others) is the capability to “understand” events.

Some systems need to classify events into types, others need to listen for specific events, and some need to predict events.

These requirements often involve assigning a score to an event, based on the advantage/disadvantage of its occurrence, and then ranking all the events according to the assigned score.
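The score-then-rank step can be sketched as follows. The `Event` fields, the 0..1 scales and the scoring formula are hypothetical; in a real system the score would typically come from a trained model rather than a fixed formula.

```python
from dataclasses import dataclass

@dataclass
class Event:
    name: str
    advantage: float      # expected benefit if the event occurs, 0..1 (hypothetical scale)
    disadvantage: float   # expected cost if the event occurs, 0..1 (hypothetical scale)
    likelihood: float     # estimated probability of occurrence, 0..1

def score(event):
    """Net expected advantage: positive is favourable, negative is unfavourable."""
    return event.likelihood * (event.advantage - event.disadvantage)

def rank(events):
    """Order events from most to least favourable according to their score."""
    return sorted(events, key=score, reverse=True)

# Illustrative events only.
events = [
    Event("sensor outage", advantage=0.0, disadvantage=0.7, likelihood=0.3),
    Event("resupply arrives", advantage=0.8, disadvantage=0.1, likelihood=0.9),
    Event("new emitter detected", advantage=0.4, disadvantage=0.5, likelihood=0.6),
]
ranked_names = [event.name for event in rank(events)]
```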

These event-understanding problems are classic “Classification and Regression” requirements, hidden behind the word “event”, for which A.I. offers many applicable models (LSTM/GRU/RNN and DNN, when the sequence of events forms a pattern that can be graphed/seen).

At the basis of this is the capability to turn an event, correctly and completely, into a set of features that an A.I. engine can use and on which it can be trained.

Again, defining and establishing an “ontology” is a key step in correctly formulating a problem that the A.I. engine can then be expected to solve.

“Anomalous behaviour detection and classification” is an immediate application.

This relies on the capability to passively detect and track objects (e.g. ships) through their radiated electromagnetic signals and to autonomously classify standard behaviours versus non-standard or anomalous routes.
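A minimal sketch of this idea follows: a model of “standard” behaviour is learned from normal tracks only, and a new track is flagged when any of its features deviates too far from that model. The track format, the two features (mean speed and maximum offset from an assumed nominal lane at y = 0), the z-score test and the threshold are all assumptions for illustration.

```python
import math
from statistics import mean, pstdev

def track_features(track):
    """track: list of (t_min, x_km, y_km) fixes; the nominal lane is assumed to be the line y = 0."""
    speeds = [math.hypot(x1 - x0, y1 - y0) / (t1 - t0)
              for (t0, x0, y0), (t1, x1, y1) in zip(track, track[1:])]
    max_offset = max(abs(y) for _, _, y in track)
    return (mean(speeds), max_offset)

def fit_standard_behaviour(tracks):
    """Learn the mean and standard deviation of each feature from 'standard' tracks only."""
    columns = list(zip(*(track_features(t) for t in tracks)))
    return [(mean(col), pstdev(col)) for col in columns]

def anomaly_score(model, track):
    """Largest absolute z-score of the track's features against the learned model."""
    return max(abs(value - mu) / sigma
               for value, (mu, sigma) in zip(track_features(track), model))

def is_anomalous(model, track, threshold=3.0):
    """Flag a track whose behaviour lies more than `threshold` deviations from the standard."""
    return anomaly_score(model, track) > threshold

def straight_track(speed_km_min, offset_km, fixes=6):
    """Helper generating a synthetic track parallel to the lane (illustration only)."""
    return [(float(i), speed_km_min * i, offset_km) for i in range(fixes)]

standard_tracks = [straight_track(0.45, 0.2), straight_track(0.50, 0.5),
                   straight_track(0.55, 0.3), straight_track(0.50, 0.4)]
model = fit_standard_behaviour(standard_tracks)
```

A ship keeping a typical speed and staying close to the lane is accepted, while one sailing several kilometres off the lane is flagged, without any rule describing what an anomaly looks like.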

• Cognitive Jamming

Cognitive Jamming is a leap forward in the context of Electronic Warfare.

The adjective “Cognitive” implies awareness of the threats and the ability to adapt the Jammer’s behaviour to the states and operative modes of the threats.

The behaviour of a traditional Electronic Attack system usually obeys the following pattern:

◦ The threat is detected and its fundamental parameters are measured.

The EM environment is typically very dense, so detecting the threat and measuring its parameters is not trivial and is also computationally intensive.

◦ The threat is identified (associated with an existing and known weapon system / platform).

This step is usually performed using an “Identification Library” that has been prepared during the “Mission Preparation” step and contains the threats that the System is likely to meet during the Mission.

◦ If the System needs to perform Electronic Attack against the identified threat, an appropriate “Jamming Program” is selected.

The “Jamming Program” is picked from the “Jamming Library” using the Identification (previous step result) as a key.

◦ The selected “Jamming Program” is executed.

During this step the Electronic Attack is performed.

The Jammer is able to handle a limited range of expected events (e.g. tracking-lost events, kinematic parameter updates and operative mode changes).

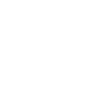

The described steps are summarized in Figure 1.

Figure 1: Traditional Jammer behaviour
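The identification and selection steps of this traditional pattern amount to two table look-ups, as the sketch below shows. The library contents, parameter ranges, threat names and program names are invented placeholders, not data from any real system.

```python
from dataclasses import dataclass

@dataclass
class Measurement:
    freq_mhz: float
    pri_us: float
    pw_us: float

# Hypothetical "Identification Library" prepared during Mission Preparation:
# threat name -> (min, max) range for each measured parameter.
IDENTIFICATION_LIBRARY = {
    "THREAT_A": {"freq_mhz": (9300, 9500), "pri_us": (900, 1100), "pw_us": (0.8, 1.2)},
    "THREAT_B": {"freq_mhz": (2900, 3100), "pri_us": (2400, 2600), "pw_us": (1.8, 2.2)},
}

# Hypothetical "Jamming Library": identification -> Jamming Program name.
JAMMING_LIBRARY = {"THREAT_A": "PROGRAM_1", "THREAT_B": "PROGRAM_2"}

def identify(measurement):
    """Return the first library threat whose parameter ranges contain the measurement."""
    for name, ranges in IDENTIFICATION_LIBRARY.items():
        if all(lo <= getattr(measurement, param) <= hi for param, (lo, hi) in ranges.items()):
            return name
    return None  # emitter not foreseen during Mission Preparation

def select_jamming_program(measurement):
    """Use the identification as the key into the Jamming Library."""
    return JAMMING_LIBRARY.get(identify(measurement))
```

The limitation is visible in the last line: an emitter that was not anticipated in the libraries yields `None`, that is, no response at all. This is the gap a cognitive approach aims to close.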

A Cognitive approach is applicable to both parts of the diagram (Sensor and Actuator).

For the Actuator part, which is considered the real core of an Electronic Attack System, let us dive into the “Jamming Program Selection” block.

This block relies heavily on a carefully built “Jamming Library”, which contains a collection of Jamming Programs designed to be effective against well-known (Identified) threats.

Applying the cognitive mind-set to this block can lead to synthesizing in real time the most appropriate Jamming Program (JP) against a given threat: this new approach implies some degree of “smartness” that must be brought into the System and that depends on some key technology and methodology.

It appears evident that Systems that learn from experience are heavily based on Artificial Intelligence and Machine Learning concepts.

In particular, the relation between experience and the task to be performed is well captured by a widely quoted definition given by Tom M. Mitchell:

“A computer program is said to learn from experience E with respect to some class of tasks T and performance measure P if its performance at tasks in T, as measured by P, improves with experience E.”

(Mitchell, T. (1997). Machine Learning. McGraw Hill. p. 2. ISBN 978-0-07-042807-2.)

In the case of the “JP Selection” block, highly skilled operators traditionally perform the task of designing the Jamming Program that is most effective against a given threat. Building a model that performs this task gives the jammer the ability to adapt faster to environmental changes and makes it more cognitive.
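Mitchell's definition can be made concrete with a deliberately simple learner. In the sketch below the task T is choosing a jamming technique, the experience E is the observed outcome of each engagement, and the performance P is the fraction of effective engagements. An epsilon-greedy selector is used as a stand-in: it is an assumption for illustration, not the method of any fielded system, and the technique names and effectiveness probabilities are invented for the simulation.

```python
import random

class JammingProgramSelector:
    """Epsilon-greedy choice among candidate techniques, driven by observed outcomes."""

    def __init__(self, techniques, epsilon=0.1, seed=0):
        self.rng = random.Random(seed)
        self.epsilon = epsilon
        self.trials = {t: 0 for t in techniques}
        self.successes = {t: 0 for t in techniques}

    def success_rate(self, technique):
        n = self.trials[technique]
        return self.successes[technique] / n if n else 0.0

    def best(self):
        """Technique with the highest observed success rate so far."""
        return max(self.trials, key=self.success_rate)

    def choose(self):
        """Mostly exploit the best-known technique, occasionally explore another one."""
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.trials))
        return self.best()

    def record(self, technique, effective):
        """Experience E: store whether the engagement was effective."""
        self.trials[technique] += 1
        self.successes[technique] += int(effective)

def simulate(true_effectiveness, engagements=2000, seed=0):
    """Run simulated engagements; true_effectiveness is hidden from the selector."""
    env = random.Random(seed + 1)
    selector = JammingProgramSelector(list(true_effectiveness), seed=seed)
    outcomes = []
    for _ in range(engagements):
        technique = selector.choose()
        effective = env.random() < true_effectiveness[technique]
        selector.record(technique, effective)
        outcomes.append(effective)
    return selector, outcomes

selector, outcomes = simulate({"technique_a": 0.2, "technique_b": 0.5, "technique_c": 0.9})
late_performance = sum(outcomes[-500:]) / 500   # performance measure P after experience
```

The selector is never told which technique works best; it discovers this from outcomes alone. A real jammer would additionally have to infer effectiveness from indirect observations of the threat, which is a much harder problem than the simulation suggests.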

Of course, the same approach can be applied to other blocks in Figure 1.

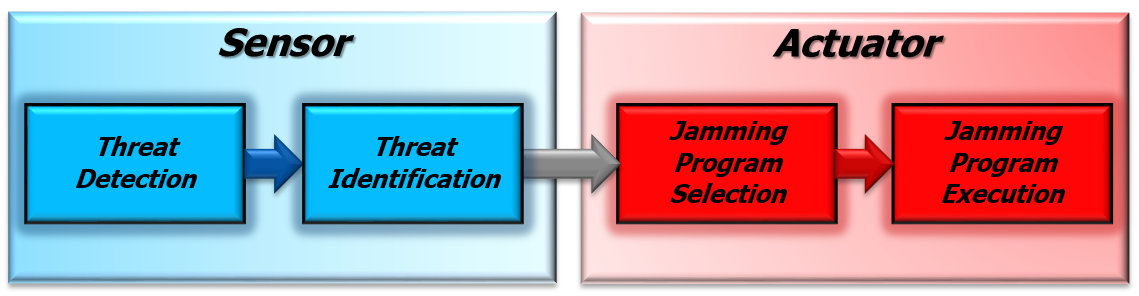

For instance, the “Threat Detection” and the “Threat Identification” are traditionally based on some form of explicitly defined waveform taxonomy that is supposedly able to represent RADAR emitted signals.

This problem is far from easy.

In fact, the environment is typically very dense and composed of RADAR instances potentially similar to each other in terms of Frequency, PRI and PW.

Additionally, modern multifunctional RADARs are difficult to frame in any existing taxonomy, since their PRI agility means they lack the pulse periodicity that is typical of older RADARs.

Removing taxonomy constraints requires that a machine learning-based paradigm be leveraged to perform the “threat detection” phase.
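One way to picture detection without a predefined taxonomy is unsupervised clustering of measured pulses, where groups emerge from the data instead of being matched against known classes. The sketch below uses simple leader clustering on (frequency, pulse width) pairs; the algorithm choice, the scaling factors and the radius are assumptions for illustration. As noted above, emitters that are very similar in these parameters would not be separated by such a simple scheme.

```python
import math

def cluster_pulses(pulses, scales=(10.0, 0.5), radius=1.0):
    """Leader clustering of (frequency_MHz, pulse_width_us) pairs with no predefined classes."""
    centres, counts, labels = [], [], []
    for pulse in pulses:
        # Scale each parameter so that one unit is a "typical" measurement spread.
        point = [value / scale for value, scale in zip(pulse, scales)]
        distances = [math.dist(point, centre) for centre in centres]
        if distances and min(distances) <= radius:
            k = distances.index(min(distances))
            counts[k] += 1
            # Running mean keeps the centre representative of its members.
            centres[k] = [c + (p - c) / counts[k] for c, p in zip(centres[k], point)]
        else:
            # No existing group is close enough: a new emitter hypothesis is opened.
            k = len(centres)
            centres.append(point)
            counts.append(1)
        labels.append(k)
    return labels

# Interleaved pulses from two hypothetical emitters, followed by a third, unseen one.
pulses = [(9400, 1.00), (3050, 0.50), (9402, 1.05), (3049, 0.48),
          (9399, 0.98), (3051, 0.52), (5600, 0.20)]
labels = cluster_pulses(pulses)
```

The last pulse opens a new cluster of its own, which shows how an emitter never described in any library can still be recognised as something distinct.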

• Cognitive approach in EW Command and Control

Generally, the C2 environment is not the ideal place for expert systems, as these systems function best in applications that are deductive and well bounded, and enjoy a host of human experts. There are, however, specific applications that fall within the bounds of control mechanisms that meet these prerequisites.

These expert systems are usually embedded in real-time military applications, typically as part of a weapon or weapons platform, and perform such military-specific functions as battle management, threat assessment, and weapons control.

As the battle-space becomes more complex and the requirement for faster decisions and reactions increases, there will be a growing need for automated expert systems for functions such as sensor interpretation and “Automatic Target Recognition-Classification-Tracking”, based on anomalous behaviour detection systems trained on “standard” behaviour data.

Other A.I. systems, known as “Decision Support Systems”, have broader applications in the C2 environment. This is because they assist in the organization of knowledge about ill-structured issues.

The emphasis is on effectiveness of decision-making, as this involves formulation of alternatives, analysis of their impacts, and interpretation and selection of appropriate options for implementation.

These systems aid humans in mission planning, information management, situation assessment, and decision-making.

A particularly relevant application is in the area of “Data Fusion”, where A.I. advancements in the fields of natural language, knowledge discovery and data mining are assisting in the analysis and interpretation of the vast quantities of (unstructured/structured, synchronous/asynchronous, formatted/unformatted) data collected by the ever-increasing number of intelligence, surveillance, and reconnaissance assets.

This cognitive approach is rapidly superseding the traditional approach based on rules, maximum likelihood and correlation among elements.

In addition, A.I. contributions to “Game Playing” and simulation are also leading to better training for personnel who must perform within the demands of the C2 environment.

The prominence of this technology will continue to increase with the demands that will be imposed on commanders and their C2 architectures in the complex and unpredictable battle-space of the future.

A.I. advances in human/machine interface capabilities will also help to close the gap between humans and the machines that support them. The new trend is “Human-Machine Teaming”, where the machine learns from past events and from the decisions of the human, increasing its experience and its probability of success in future (proposed or autonomous) decisions.

The still controversial question lies in whether A.I. technology can advance to the point where this gap no longer exists, effectively allowing machines to replace humans and perform with human intelligence.

It is at this stage that A.I. will complete its entry in the realm of command.

When referring to COMMAND, this intelligence is key because, by nature, command scenarios will involve situations wherein it is necessary to deal with inexact or incomplete knowledge about a problem.

The solution process for these problems is what is commonly called decision-making, and it is fundamental to command.

Command is primarily an intellectual exercise and is traditionally associated only with human intelligence.

Included here are such things as “creativity”, which denotes inventiveness and imagination, the capacity to learn and adapt, the ability to initiate and surprise, the facility for contemplation and reasoning, the capacity for thought and the most human of all qualities, consciousness.

These generally represent the intellectual qualities that are considered necessary to exercise command.

A.I. mathematical models for representing and generating creativity are an area of growing importance.

Until A.I. systems can be fruitfully, although not infallibly, creative, their ability to model, and even to aid, human thinking will be strictly limited, but creativity is only part of the issue.

Before a “thinking” machine, we would first need a “learning” machine, capable of altering its own configuration through a series of rewards and punishments, in order to filter out wrong ideas and retain useful ones.

A.I. machines can adapt their behaviour to meet goals in a range of environments by using random variations in their behaviour followed by iterative selection in a manner similar to natural evolution.
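The combination of random variation and iterative selection can be sketched as a (1+1) evolutionary search, one of the simplest schemes of this kind: a behaviour is mutated at random and the variant is retained only if it meets the goal at least as well as its parent. The goal function, the parameter values and the names below are assumptions for illustration.

```python
import random

def evolve(fitness, start, iterations=500, step=0.1, seed=0):
    """(1+1) evolutionary search: mutate at random, keep the variant only if it is no worse."""
    rng = random.Random(seed)
    current, current_fit = list(start), fitness(start)
    for _ in range(iterations):
        candidate = [x + rng.gauss(0.0, step) for x in current]   # random variation
        candidate_fit = fitness(candidate)
        if candidate_fit >= current_fit:                          # selection ("reward")
            current, current_fit = candidate, candidate_fit
        # otherwise the variant is discarded ("punishment") and the parent survives
    return current, current_fit

def goal(behaviour):
    """Hypothetical goal: the closer the two behaviour parameters are to (1.0, -2.0), the better."""
    return -((behaviour[0] - 1.0) ** 2 + (behaviour[1] + 2.0) ** 2)

initial_behaviour = [4.0, 1.0]
best_behaviour, best_fitness = evolve(goal, initial_behaviour)
```

Because a variant is accepted only when it is at least as good as its parent, the fitness can never decrease; the machine is never told where the goal lies, only how well each attempt scored.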

Moreover, the goal is no longer for A.I. merely to learn but also to develop; that is, to enrich its cognitive ability to learn and extend its physical ability to apply learning (Deep Learning).

By exploiting its ability to interact with humans, A.I.-based C2 can learn diverse behaviours. Eventually, in the context of military operations, commanders who might not know beforehand what tasks the A.I. will need to accomplish will be able to assign it a task naturally and quickly (Cognitive Decision Making).

For example, the communicative ability of the human brain is limited by low-bandwidth input and output, and the thinking power of the brain, while impressive, is rather slow.

In the C2 context, the amount of time required to train and develop human commanders is significant, and yet they are still severely affected by such prevalent factors as stress and data saturation.

In these areas, as well as in more recognized areas involving human physical and emotional vulnerabilities, A.I. technologies will offer significant advantages.

Since command, by nature, is an intellectual activity that requires decision-making in a dynamic environment, these developments are promising elements for the progressive incorporation of A.I. technology within the command element of C2.

Meanwhile, this introduction can be supported by means of a progressively decreasing supervision activity, thanks to the already mentioned Human-Machine Teaming (HMT) approach.
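One possible reading of “progressively decreasing supervision” is sketched below: the machine proposes, the human decides, and autonomy is granted only while the machine's recent proposals agree often enough with the human's decisions. The window length, the agreement threshold and the class name are assumptions for illustration, not an established HMT procedure.

```python
from collections import deque

class TeamingSupervisor:
    """Grants autonomy once recent machine proposals agree often enough with human decisions."""

    def __init__(self, window=20, required_agreement=0.9):
        self.history = deque(maxlen=window)   # only the most recent comparisons are kept
        self.required_agreement = required_agreement

    def record(self, machine_proposal, human_decision):
        """Store whether the machine's proposal matched what the human actually decided."""
        self.history.append(machine_proposal == human_decision)

    def agreement(self):
        """Fraction of recent proposals that matched the human's decision."""
        return sum(self.history) / len(self.history) if self.history else 0.0

    def machine_may_act_alone(self):
        """Autonomy requires a full window of evidence and a high enough agreement rate."""
        window_full = len(self.history) == self.history.maxlen
        return window_full and self.agreement() >= self.required_agreement
```

Because the window slides, autonomy is not permanent: if the machine's proposals start to diverge from the human's decisions, supervision is automatically restored.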